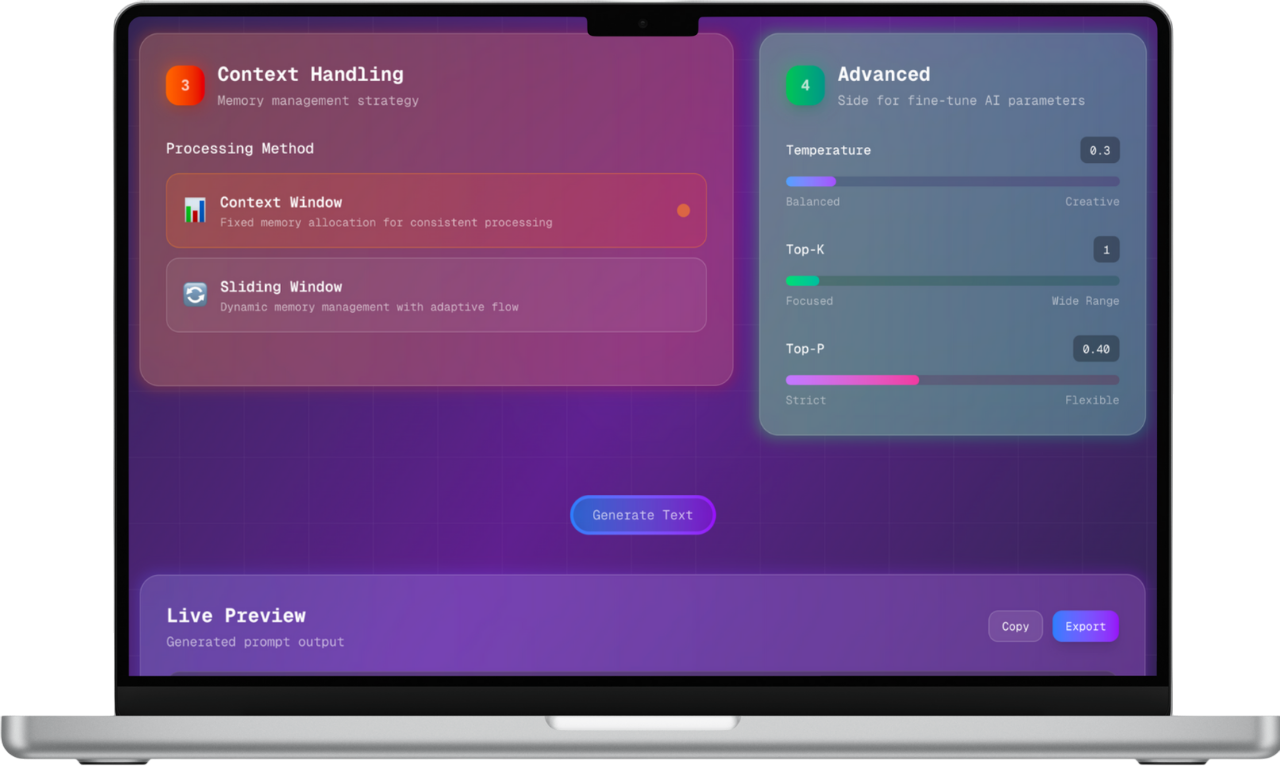

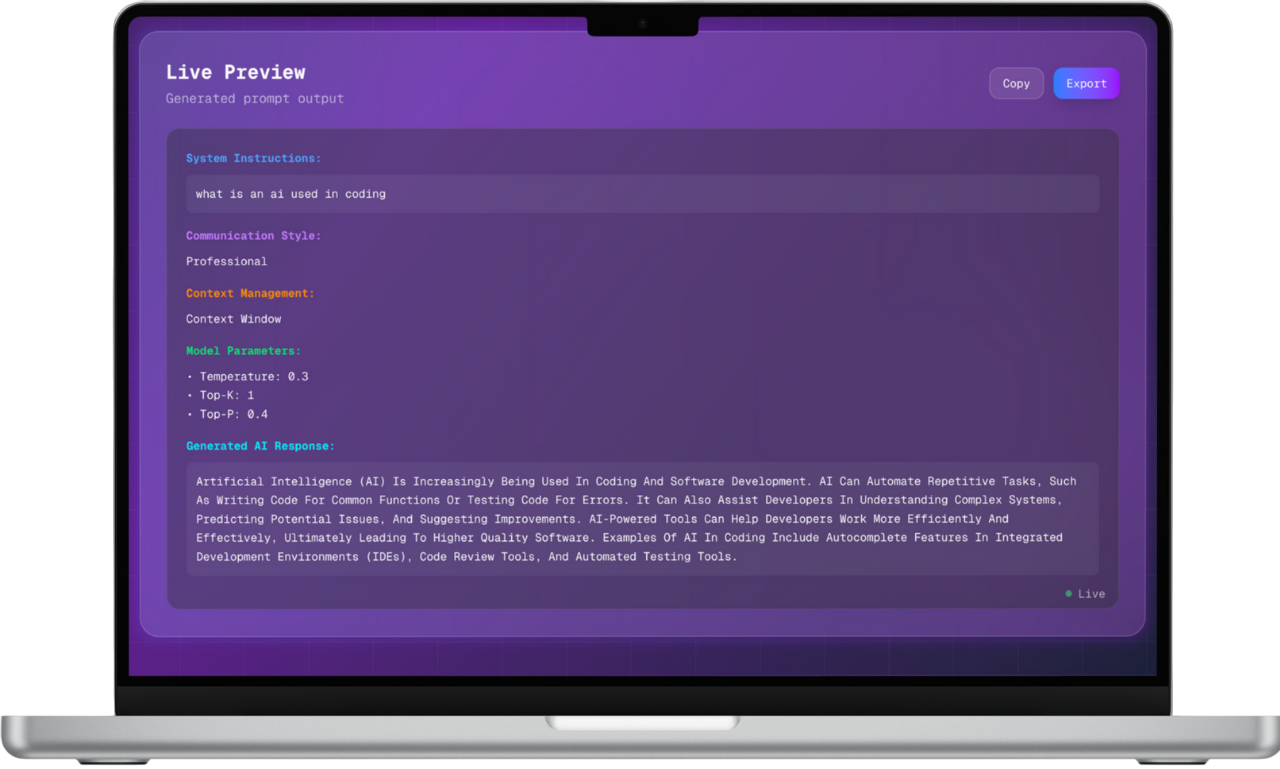

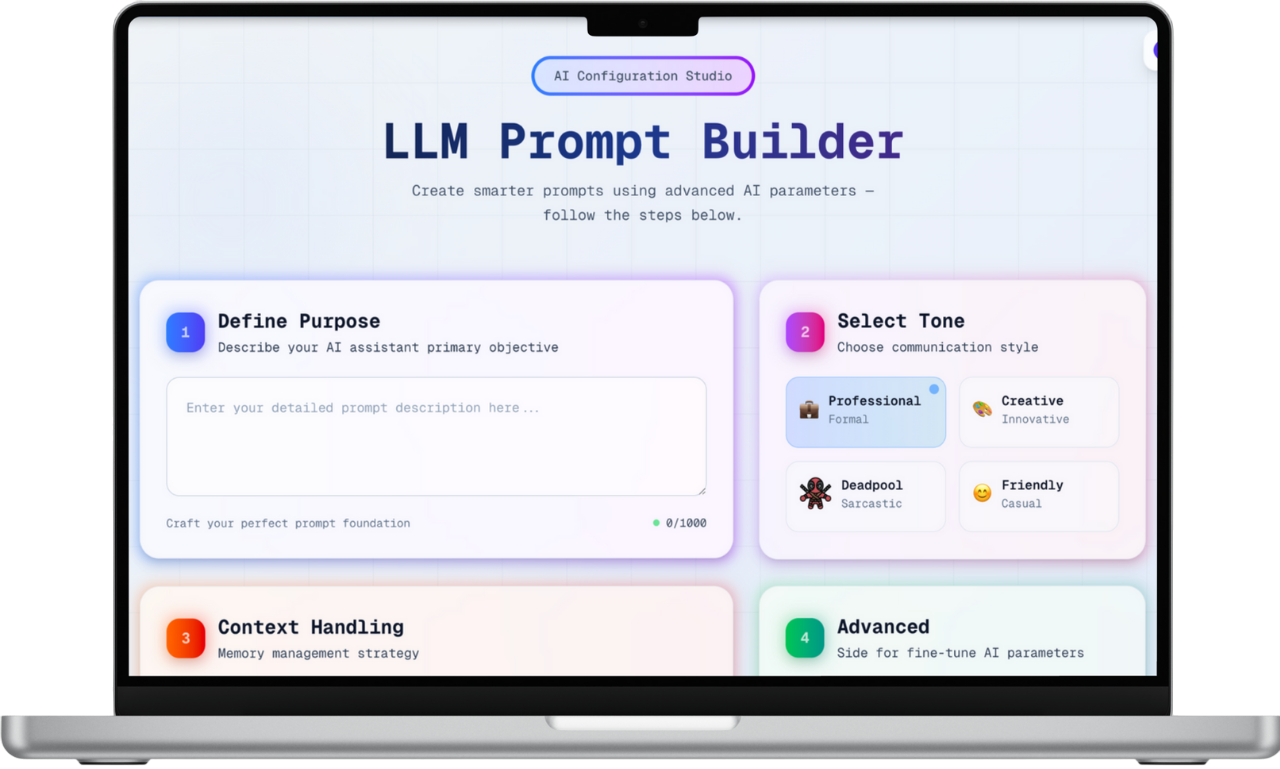

Ever wondered how changing parameters affects your AI prompt?

Tools & Technologies

Next.js + Python + FastAPI + Hugging Face + LangChain

What This Project Does

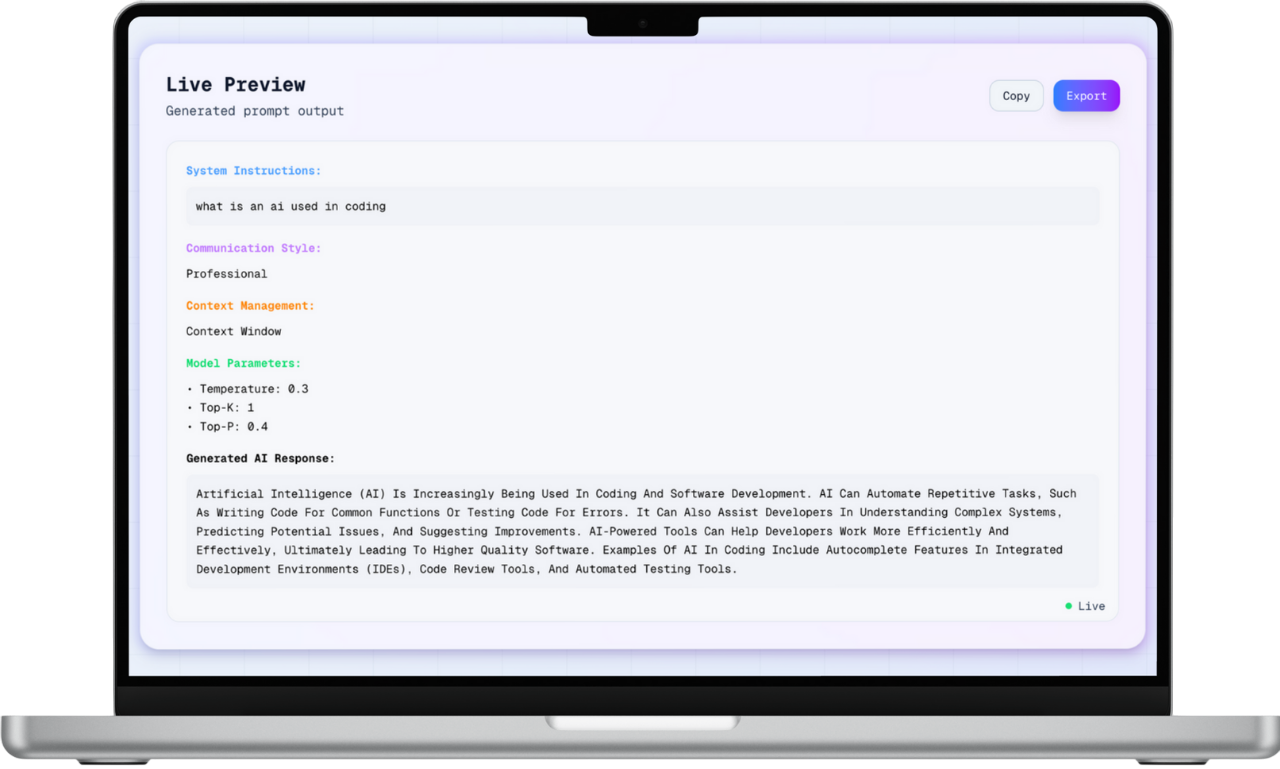

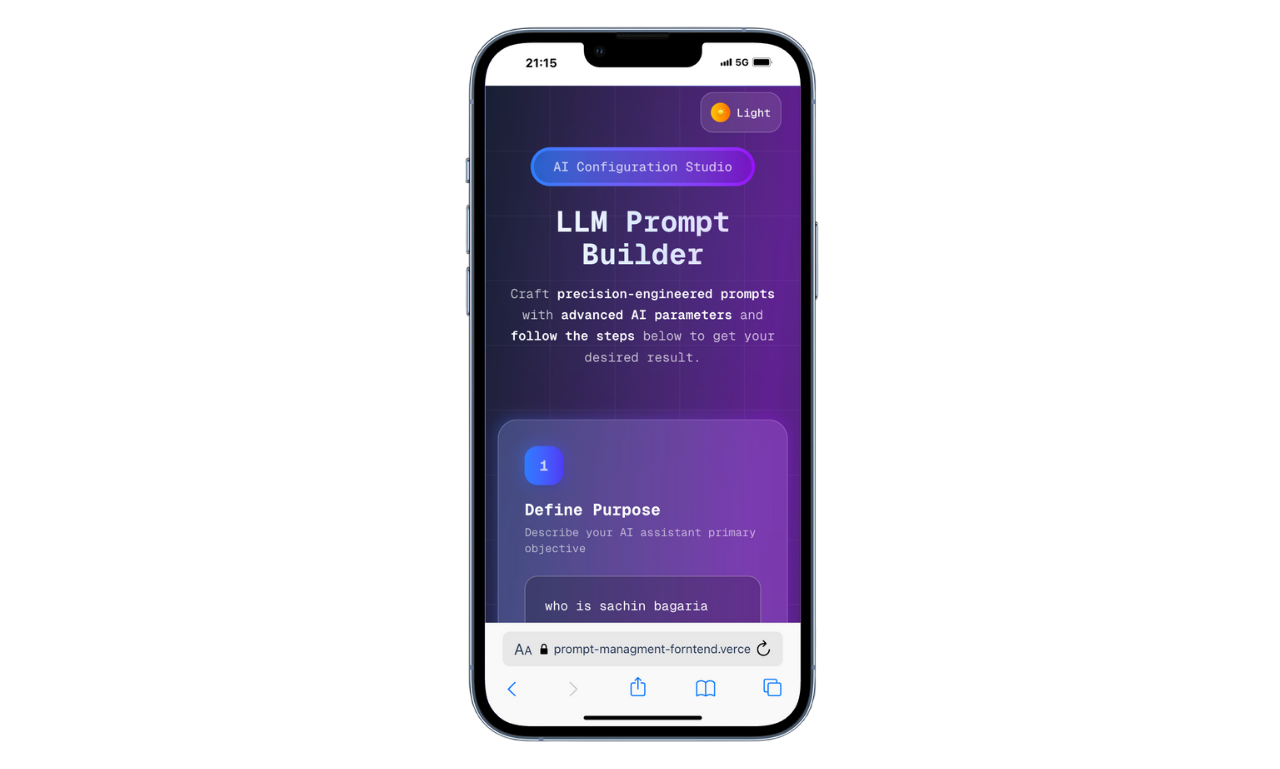

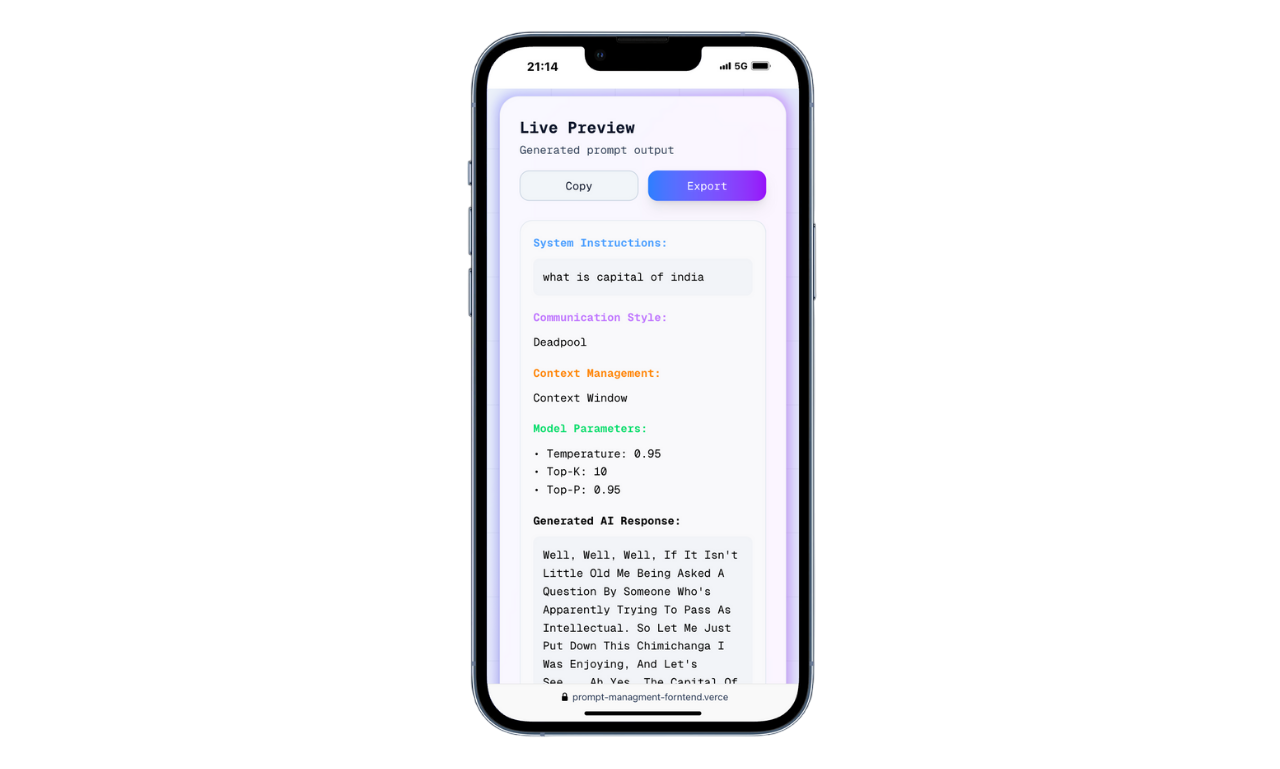

This tool was built to solve a common challenge in prompt engineering: not knowing how LLM parameters like temperature, top-k, top-p, or tone actually affect the output. I wanted to make that process more visual, hands-on, and beginner-friendly.

So I built an interactive web app with Next.js on the frontend, giving users a fast, responsive interface to play with these settings in real time. The backend runs on FastAPI (Python), which processes prompts quickly and routes them to the LLM. For the AI logic, I integrated Hugging Face models through LangChain, which made it easy to experiment with different tones (friendly, sarcastic, or professional) and instantly see the difference.

On the performance and SEO side, the app scores above 90 on Google PageSpeed Insights, so it's not just functional but also fast and optimized for visibility. Overall, it’s a small but powerful playground for anyone who wants to truly understand how LLMs behave with different inputs.

Link: https://prompt-managment-forntend.vercel.app
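To give a feel for what temperature, top-k, and top-p do under the hood, here is a minimal sketch in plain Python. It is not the app's actual code: the function name `next_token_probs` and the toy logits are made up for illustration, and applying the filters in the order temperature, then top-k, then top-p is one common convention rather than the only one.

```python
import math


def next_token_probs(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return {token: probability} after temperature, top-k and top-p filtering.

    Hypothetical helper for illustration only; `logits` maps tokens to raw scores.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")

    # Temperature: divide the logits before softmax.
    # Values below 1 sharpen the distribution, values above 1 flatten it.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(
        ((tok, w / total) for tok, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # Top-k: keep only the k most likely tokens (0 disables the filter),
    # then renormalise so the survivors sum to 1 again.
    if top_k > 0:
        ranked = ranked[:top_k]
        kept_mass = sum(p for _, p in ranked)
        ranked = [(tok, p / kept_mass) for tok, p in ranked]

    # Top-p (nucleus): keep the smallest prefix of the ranked list whose
    # cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Final renormalisation over the tokens that survived both filters.
    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}


# Toy scores for the word that might follow "The movie was ..."
toy_logits = {"great": 2.0, "good": 1.5, "fine": 0.5, "odd": -1.0}

for settings in (
    {"temperature": 1.0},
    {"temperature": 0.3},
    {"temperature": 1.0, "top_k": 2},
    {"temperature": 1.0, "top_p": 0.9},
):
    probs = next_token_probs(toy_logits, **settings)
    print(settings, {tok: round(p, 3) for tok, p in probs.items()})
```

Running it prints one distribution per setting. Lowering the temperature pushes more probability onto the top token, while top-k and top-p both shrink the set of tokens the model is allowed to pick from.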